Rust: Systems Programming for Everyone

Felix Klock (@pnkfelix), Mozilla

space: next slide; esc: overview; arrows navigate http://bit.ly/2qgUIle

What is Rust?

What is Rust?

New programming language

- (... and ecosystem, and development community)

Emphasizing control, safety, and speed

- (... especially when it comes to concurrency)

Low-level hacking without fear of segfaults nor data races

- or more simply: "Hack without Fear"

Why ...?

Why use Rust?

- Fast code, low memory footprint

- Go from bare metal (assembly; C FFI) ...

... to high-level (closures, generic containers) ...with zero cost (no GC, unboxed closures, monomorphization); no runtime, cross-lang interop

- Safety and Parallelism

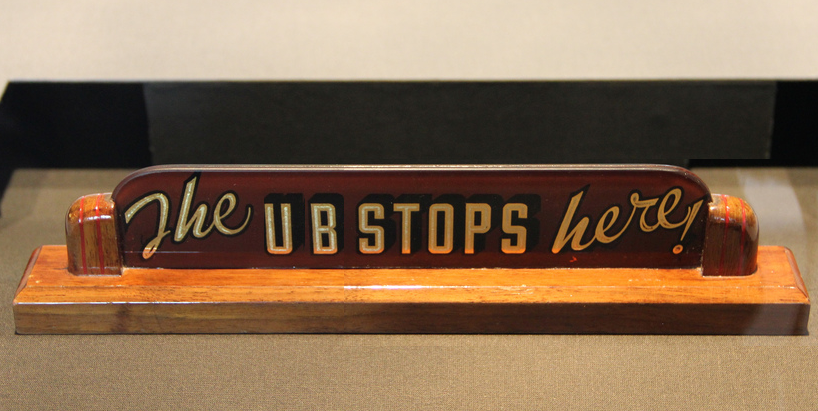

(apologies to Harry S. Truman)

Safety and Parallelism

Safety

No segmentation faults

No undefined behavior

No data races

(Multi-paradigm) Parallelism

msg passing via channels

shared state (R/W-capabilities controlled via types)

use native threads... or scoped threads... or work-stealing...

Let's Jump In: Safety

C++

int gather_negs(std::vector<int> &input, std::vector<int> &out) {

int count = 0;

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) { out.push_back(-val); count++; }

}

return count;

}Java

static int gather_negs(ArrayList<Integer> input, ArrayList<Integer> out) {

int count = 0;

for (int val: input) {

if (val < 0) { out.add(-val); count++; }

}

return count;

}Rust

fn gather_negs(input: &Vec<i32>, out: &mut Vec<i32>) -> isize {

let mut count = 0;

for val_ref in input {

let val = *val_ref;

if val < 0 { out.push(-val); count += 1; }

}

return count;

}(silly? well ... slides are strawmen succinct)

Sample usage of gather_negs

let values = [-1, 2, 3, -4, -5, -6, -7, -8, -9, 10];

let mut input = Vec::new();

input.extend_from_slice(&values); // put `values` into `input` vector

let mut myvector = Vec::new();

gather_negs(&input, &mut myvector); // gather neg vals of `input` into `myvector`can make similar code for C++ and Java, e.g.:

int values[] = { -1, 2, 3, -4, -5, -6, -7, -8, -9, 10};

std::vector<int> input(values, values + sizeof(values) / sizeof(int));

std::vector<int> myvector;

gather_negs(input, myvector); // gather neg vals of `input` into `myvector`(Same for Rust, C++ and Java). So why is Rust special?

More C++ gather_negs

int values[] = { -1, 2, 3, -4, -5, -6, -7, -8, -9, 10};

std::vector<int> input(values, values + sizeof(values) / sizeof(int));

std::vector<int> myvector = input;

gather_negs(myvector, myvector); // gather neg vals of `myvector` into `myvector`How does this behave?

Might expect:

Reality (for C++) is:

or:

or:

Segmentation fault: 11C++ and Undefined Behavior

C++: Bugs yield Undefined Behavior

> for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

> auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

> if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

> out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

> for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

> auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

> if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

> for (auto it = input.begin(); it != input.end(); ++it) {

> auto val = *it;

> if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

> for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

> auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

> if (val < 0) {

out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

> out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

> out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

> out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

> out.push_back(-val);

}

}C++: Bugs yield Undefined Behavior

> for (auto it = input.begin(); it != input.end(); ++it) {

auto val = *it;

if (val < 0) {

out.push_back(-val);

}

}C++ chose speed over safety

Iterator invalidation yields undefined behavior

Java has Iterator Invalidation too

Analogous code in Java

ArrayList<Integer> myvector = new ArrayList<Integer>(input);

myvector.addAll(Arrays.asList(values));

gather_negs(myvector, myvector);yields ConcurrentModificationException at runtime.

(So Java remains safe; its collection code dynamically checks to detect iterator invalidation. Programmer's bug is detected at runtime.)

Rust

Analogous code in Rust

let mut myvector = Vec::new();

myvector.extend(values.iter());

gather_negatives(&myvector,

&mut myvector );yields:

error: cannot borrow `myvector` as mutable

because it is also borrowed as immutable

--> examples/vex.rs:18:27

|

17 | gather_negatives(&myvector,

| -------- immutable borrow occurs here

18 | &mut myvector );

| ^^^^^^^^ - immutable borrow ends here

| |

| mutable borrow occurs here

error: aborting due to previous errorat compile-time.

Safe code means no undefined behavior

- Compiler rejects code that does unsafe things, when feasible

- Developer must explicitly opt into use of

unsafeconstructs, like raw pointer addresses

- Some things checked at runtime (e.g. array bounds checks); errors here yield "panics"

- No undefined behavior; but unwinds/aborts are allowed

Let's Jump In: Parallelism

Example: Sum of (even) numbers in C++

Core function to add the even values in an integer range.

uint64_t do_partial_sum(int start, int to_do) {

uint64_t result = 0;

for (int i = start; i < start + to_do; i++) {

if (i % 2 == 0) { // avoid compiler reduction to closed form solution

result += i;

}

}

return result;

}Parallelize it: Divide and conquer!

void thread_work(uint64_t *result, int start, int to_do) {

*result += do_partial_sum(start, to_do);

}

int main(int argc, char *argv[]) {

uint64_t result;

/* ... */

std::vector<std::thread *> threads;

result = 0;

for (int start_val = 0, i = 0;

start_val < max;

start_val += sum_per_thread, i++) {

threads.push_back(new std::thread(thread_work,

&result,

start_val,

sum_per_thread));

}

for (int i = 0; i < thread_count; i++) {

threads[i]->join();

}

/* ... */

}Let's try it!

(I know you're aching to see more Rust; we'll get to it!)

Let's try our C++ code

| threads | average time | notes |

|---|---|---|

| 1 | (TBD) | |

| 4 | (TBD) | |

| 1000 | (TBD) |

Four runs, 1 thread

Let's try our C++ code

| threads | average time | notes |

|---|---|---|

| 1 | 0.44004075 | |

| 4 | (TBD) | |

| 1000 | (TBD) |

Four runs, 1 thread

Let's try our C++ code

| threads | average time | notes |

|---|---|---|

| 1 | 0.44004075 | |

| 4 | (TBD) | |

| 1000 | (TBD) |

Four runs, 4 threads

Let's try our C++ code

| threads | average time | notes |

|---|---|---|

| 1 | 0.44004075 | |

| 4 | 0.15776775 | |

| 1000 | (TBD) |

Four runs, 4 threads

Let's try our C++ code

| threads | average time | notes |

|---|---|---|

| 1 | 0.44004075 | |

| 4 | 0.15776775 | |

| 1000 | (TBD) |

Four runs, 1000 threads

Let's try our C++ code

| threads | average time | notes |

|---|---|---|

| 1 | (but | |

| 4 | its all | |

| 1000 | bogus!) |

Four runs, 1000 threads

Data Race!

Data Race!

void thread_work(uint64_t *result, int start, int to_do) {

*result += do_partial_sum(start, to_do);

// ~~~~~~~

}

int main(int argc, char *argv[]) {

uint64_t result;

/* ... */

std::vector<std::thread *> threads;

result = 0;

for (int start_val = 0, i = 0;

start_val < max;

start_val += sum_per_thread, i++) {

auto result_ptr = &result;

// ~~~~~~~

threads.push_back(new std::thread(thread_work,

result_ptr,

start_val,

sum_per_thread));

}

for (int i = 0; i < thread_count; i++) {

threads[i]->join();

}

/* ... */

}Analogous code in Rust

fn do_partial_sum(start: isize, to_do: isize) -> u64 {

let mut result: u64 = 0;

for i in start..(start + to_do) {

if i % 2 == 0 {

result += i as u64;

}

}

return result;

}or if you prefer

fn do_partial_sum_alt(start: isize, to_do: isize) -> u64 {

(start..(start + to_do)).filter(|i| i % 2 == 0).map(|i| i as u64).sum()

}Parallelized

(Attempt at analogous code, now in Rust.)

let mut threads = Vec::new();

result = 0;

::crossbeam::scope(|scope| {

let mut start_val = 0;

while start_val < max {

let result_ptr = &mut result;

threads.push(scope.spawn(move || {

*result_ptr += do_partial_sum(start_val, sum_per_thread);

}));

start_val += sum_per_thread;

}

for thread in threads {

thread.join();

}

});No data races allowed!

error: cannot borrow `*result` as mutable more than once at a time

--> src/a01_opening.rs:963:31

|

960 | ::crossbeam::scope(|scope| {

...

962 | while start_val < max {

|

963 | let result_ptr = &mut result;

| ^^^^^^

| |

| second mutable borrow occurs here

| first mutable borrow occurs here

...

972 | });

| - first borrow ends here

error: aborting due to previous errorEliminating the race

- In both C++ and in Rust, you can easily eliminate the data race

- common approach: can wrap a mutex around

result - better: use atomic operation (e.g. compare-and-swap, fetch-and-add)

- better still: use separate result receiver for each thread, then add those up at end.

Point is: Rust forces you to resolve this bug

C++ allows silent, scheduler dependent failure (which may arise only rarely)

Why ...? (continued)

Why would Mozilla sponsor Rust?

- Hard to prototype research-y browser changes atop C++ code base

- Rust ⇒ Servo, WebRender

- Want Rust for next-gen infrastructure (services, IoT)

"Our mission is to ensure the Internet is a global public resource, open and accessible to all. An Internet that truly puts people first, where individuals can shape their own experience and are empowered, safe and independent."

- "accessible to all": IMO, Rust may "bring system programming to the masses"

Where is Rust now?

Where is Rust now?

1.0 release was back in May 2015

Rolling release cycle (up to Rust 1.16 as of March 16nd 2017)

Open source from the begining

https://github.com/rust-lang/rust/Open model for future change (RFC process)

https://github.com/rust-lang/rfcs/Awesome developer community (~1,400 people in

#rust, ~300 people in#rust-internals, ~1,900 unique commiters to rust.git)

Talk plan

Talk plan

- Demonstration, "Why Rust"

- "Ownership is easy" (... or is it?)

- Sharing (Data) between Threads

- Sharing (Libraries) between People

some (white) lies: "Rust is just about ownership"

"Ownership is intuitive"

"Ownership is intuitive"

Let's buy a car

let money: Money = bank.withdraw_cash();"Ownership is intuitive"

Let's buy a car

let money: Money = bank.withdraw_cash();

let my_new_car: Car = dealership.buy_car(money);money transferred into dealership, and car transferred to us.

let second_car = dealership.buy_car(money); // <-- cannot reuse"Ownership is intuitive"

let money: Money = bank.withdraw_cash();

let my_new_car: Car = dealership.buy_car(money);

// let second_car = dealership.buy_car(money); // <-- cannot reuse

my_new_car.drive_to(home);

garage.park(my_new_car);my_new_car.drive_to(...) // doesn't work either (reuse again)(can't drive car without access to it, e.g. taking it out of the garage)

"Ownership is intuitive"

let money: Money = bank.withdraw_cash();

let my_new_car: Car = dealership.buy_car(money);

// let second_car = dealership.buy_car(money); // <-- cannot reuse

my_new_car.drive_to(home);

garage.park(my_new_car);

// my_new_car.drive_to(...) // doesn't work either (reuse again)

let my_car = garage.unpark();

my_car.drive_to(work);...reflection time...

Ownership is intuitive?

Correction: Ownership is intuitive, except for programmers ...

(copying data like integers, and characters, and .mp3's, is "free")

... and anyone else who names things

Über Sinn und Bedeutung

("On sense and reference" -- Gottlob Frege, 1892)

If ownership were all we had, car-purchase slide seems nonsensical

my_new_car.drive_to(home);Does this transfer home into the car?

Do I lose access to my home, just because I drive to it?

We must distinguish an object itself from ways to name that object

Above,

homecannot be (an owned)Homehomemust instead be some kind of reference to aHome

So we will need references

We can solve any problem by introducing an extra level of indirection

-- David J. Wheeler

a truth: Ownership is important

Ownership is important

| Ownership enables: | which removes: |

|---|---|

| RAII-style destructors | a source of memory leaks (or fd leaks, etc) |

| no dangling pointers | many resource management bugs |

| no data races | many multithreading heisenbugs |

Do I need to take ownership here, accepting the associated resource management responsibility? Would temporary access suffice?

Good developers ask this already!

Rust forces function signatures to encode the answers

(and they are checked by the compiler)

Sharing Data: Ownership and References

Rust types

Generic Types, Bounded Polymorphism

fn choose<T>(x: T, y: T) -> T { return x; }

fn sum<T: Add>(x: T, y: T) -> T::Output { return x + y; }

fn sum3<T>(x: T, y: T, z: T) -> T where T: Add<Output=T> { return x + y + z; }Type Constructions

| Move | Copy | Copy if T:Copy |

|---|---|---|

Vec<T>, String, ... |

i32, char, ... |

[T; n], (T1,T2,T3), ... |

References to data

&mut T,&T(plus library reference types:

Box<T>,Cow<T>,Rc<T>, ...)

Why multiple &-reference types?

Distinguish exclusive access from shared access

Enables safe, parallel API's

| Ownership | T |

|

| Exclusive access | &mut T |

("mutable") |

| Shared access | &T |

("read-only") |

A Metaphor

&mut: can I borrow the car?

fn borrow_the_car_1() {

let mut christine = Car::new();

{

let car_keys = &mut christine;

let arnie = invite_friend_over();

arnie.lend(car_keys);

} // end of scope for `arnie` and `car_keys`

christine.drive_to(work); // I still own the car!

}But when her keys are elsewhere, I cannot drive christine!

fn borrow_the_car_2() {

let mut christine = Car::new();

{

let car_keys = &mut christine;

let arnie = invite_friend_over();

arnie.lend(car_keys);

christine.drive_to(work); // <-- compile error

} // end of scope for `arnie` and `car_keys`

}Owner loses capabilities attached to &mut-borrows only temporarily (*)

(*): "Car keys" return guaranteed by Rust; sadly, not by physical world

Slices: borrowing parts of an array

Basic Vec<B>

let mut a = Vec::new();

for i in 0..n { a.push(B::new()); }(a has read and write capabilities)

Immutable borrowed slices: &[T]

let mut a = Vec::new();

for i in 0..n { a.push(B::new()); }

let r1 = &a[0..3];

let r2 = &a[7..n-4];(a has only read capability now; shares it with r1 and r2)

Safe overlap between &[..]

let mut a = Vec::new();

for i in 0..n { a.push(B::new()); }

let r1 = &a[0..7];

let r2 = &a[3..n-4];Basic Vec<B> again

(a has read and write capabilities)

Mutable slice: &mut [T]

let w = &mut a[0..n];(a has no capabilities; w now has read and write capability)

Mutable disjoint slices

let (w1,w2) = a.split_at_mut(n-4);(w1 and w2 share read and write capabilities for disjoint portions)

Shared Ownership

Shared Ownership

let rc1 = Rc::new(B::new());

let rc2 = rc1.clone(); // increments ref-count on heap-alloc'd value(rc1 and rc2 each have read access; but neither can statically assume exclusive (mut) access, nor can they provide &mut borrows without assistance.)

Sharing Work: Parallelism / Concurrency

Threading APIs (plural!)

std::threadcrossbeam: Lock-Free Abstractions, Scoped "Must-be" Concurrencyrayon: Scoped Fork-join "Maybe" Parallelism (inspired by Cilk)

(Only the first comes with Rust out of the box)

std::thread

fn spawn_them(messages: Vec<String>) -> Vec<JoinHandle<usize>> {

let mut handles = Vec::new();

for mut msg in messages {

handles.push(std::thread::spawn(move || {

msg.push_str(", isn't that special?");

return msg.len();

}));

}

return handles;

}- Joining

JoinHandle<T>returns value (T) from thread's closure (|| { ... })

- Handles move like other values (can even be sent between threads)

- Compiler assumes nothing of extents (

threadKmay outliveparent)

What if sole ownership does not suffice?

Example: two threads both must read from one heap-allocated vector. That Vec must survive as long as the threads.

One approach: shared ownership

fn demo_sharing() {

let mut v = Vec::new();

v.push("Hello"); v.push(" "); v.push("World!");

// transfer vector `v` to heap-allocated (and ref-counted) storage

let rc1 = Rc::new(v);

let rc2 = rc1.clone(); // increments ref-count on heap-alloc'd value

}Problem solved?

fn failed_sharing_cross_threads() {

let mut v = Vec::new();

v.push("Hello"); v.push(" "); v.push("World!");

// transfer vector `v` to heap-allocated (and ref-counted) storage

let rc1 = Rc::new(v);

let rc2 = rc1.clone(); // increments ref-count on heap-alloc'd value

let h1 = std::thread::spawn(move || { rc1[0].len() });

let h2 = std::thread::spawn(move || { rc2[2].len() });

h1.join(); h2.join();

}error: the trait bound `Rc<Vec<&str>>: Send` is not satisfied in `[closure]`

--> src/b01_concurrency_demos.rs:126:14

|

126 | let h1 = std::thread::spawn(move || { rc1[0].len() });

| ^^^^^^^^^^^^^^^^^^ within `[closure]`, the trait `Send` is

| not implemented for `Rc<Vec<&str>>`

|

= note: `Rc<Vec<&str>>` cannot be sent between threads safely

= note: required because it appears within the type `[closure]`

= note: required by `std::thread::spawn`Issue

Rc<T> uses non-atomic updates for ref-count maintenance

Easily fixed: if you need to share ownership across threads, then use Arc<T> instead.

fn demo_sharing_cross_threads() {

let mut v = Vec::new();

v.push("Hello"); v.push(" "); v.push("World!");

// transfer vector `v` to heap-allocated (and ref-counted) storage

let arc1 = Arc::new(v);

let arc2 = arc1.clone(); // increments ref-count on heap-alloc'd value

let h1 = std::thread::spawn(move || { arc1[0].len() });

let h2 = std::thread::spawn(move || { arc2[2].len() });

h1.join(); h2.join();

}Illustrating Arc

More general idea

Rc<T>vsArc<T>is (an important) detailFundamental concept is deeper

Traits

SendandSynccontrol cross-thread capabilities

T: Sendimplies ownership ofTcan be tranferred across threads

T: Syncimplies a reference toT(e.g.&T,Arc<T>) can be shared across threads

Sendenables Message Passing style concurrency, via channels

Syncenables Shared State style concurrency; onlyTwith synchronized access is allowed

Any other solutions to this besides shared ownership?

Yes; the best ones are in crates outside Rust stdlib.

crossbeam

provides lock-free data structures

and a scoped threading abstraction

upholds Rust's safety (data-race freedom) guarantees

scoped threading?

std::thead does not allow sharing stack-local data

fn std_thread_fail() {

let array: [String; 3] = [format!("hello"), format!(" "), format!("world")];

for string_ref in &array {

std::thread::spawn(move || {

let r: &String = string_ref;

println!("element: {}", r);

});

}

} // end of `array` scope; it is popped and strings are deallocatederror: `array` does not live long enough

--> src/b01_concurrency_demos.rs:92:24

|

92 | for string_ref in &array {

| ^^^^^ does not live long enough

...

98 | } // end of `array` scope; it is popped and strings are deallocated

| - borrowed value only lives until here

|

= note: borrowed value must be valid for the static lifetime...crossbeam scoped threading

fn crossbeam_demo() {

let array: [String; 3] = [format!("hello"), format!(" "), format!("world")];

::crossbeam::scope(|scope| {

for string_ref in &array {

let r: &String = string_ref;

scope.spawn(move || {

println!("element: {}", r);

});

}

});

}::crossbeam::scope enforces parent thread joins on all spawned children before returning

- ensures child threads can soundly access local refs they receive

crossbeam scope: "must-be concurrency"

Each scope.spawn(..) invocation fires up a fresh thread

(Literally just a wrapper around std::thread)

crossbeam scope: "must-be concurrency"

vs rayon: "may-be parallelism"

rayon demo 1: map reduce

Sequential

fn demo_map_reduce_seq(stores: &[Store], list: Groceries) -> u32 {

let total_price = stores.iter()

.map(|store| store.compute_price(&list))

.sum();

return total_price;

}Parallel (potentially)

fn demo_map_reduce_par(stores: &[Store], list: Groceries) -> u32 {

let total_price = stores.par_iter()

.map(|store| store.compute_price(&list))

.sum();

return total_price;

}Rayon's Rule

the decision of whether or not to use parallel threads is made dynamically, based on whether idle cores are available

i.e., solely for offloading work, not for when concurrent operation is necessary for correctness

(uses work-stealing under the hood to distribute work among a fixed set of threads)

rayon demo 2: quicksort

fn quick_sort<T:PartialOrd+Send>(v: &mut [T]) {

if v.len() > 1 {

let mid = partition(v);

let (lo, hi) = v.split_at_mut(mid);

rayon::join(|| quick_sort(lo),

|| quick_sort(hi));

}

}fn partition<T:PartialOrd+Send>(v: &mut [T]) -> usize {

// see https://en.wikipedia.org/wiki/

// Quicksort#Lomuto_partition_scheme

...

}rayon demo 2: quicksort

fn quick_sort<T:PartialOrd+Send>(v: &mut [T]) {

if v.len() > 1 {

let mid = partition(v);

let (lo, hi) = v.split_at_mut(mid);

rayon::join(|| quick_sort(lo),

|| quick_sort(hi));

}

}rayon demo 2: quicksort

fn quick_sort<T:PartialOrd+Send>(v: &mut [T]) {

if v.len() > 1 {

let mid = partition(v);

let (lo, hi) = v.split_at_mut(mid);

rayon::join(|| quick_sort(lo),

|| quick_sort(hi));

}

}"Recursion is simple", right (?)

rayon demo 3: buggy quicksort

fn quick_sort<T:PartialOrd+Send>(v: &mut [T]) {

if v.len() > 1 {

let mid = partition(v);

let (lo, hi) = v.split_at_mut(mid);

rayon::join(|| quick_sort(lo),

|| quick_sort(hi));

}

}fn quick_sort<T:PartialOrd+Send>(v: &mut [T]) {

if v.len() > 1 {

let mid = partition(v);

let (lo, hi) = v.split_at_mut(mid);

rayon::join(|| quick_sort(lo),

|| quick_sort(lo));

// ~~ data race!

}

}(See blog post "Rayon: Data Parallelism in Rust" bit.ly/1IZcku4)

Big Idea

3rd parties identify (and provide) new abstractions for concurrency and parallelism unanticipated in std lib.

Sharing Code: Cargo

Sharing Code

std::thread is provided with std lib

But crossbeam and rayon are 3rd party

What is Rust's code distribution story?

Cargo

cargo is really simple to use

cargo new -- create a project

cargo test -- run project's unit tests

cargo run -- run binaries associated with project

cargo publish -- push project up to crates.ioEdit the associated Cargo.toml file to:

- add dependencies

- specify version / licensing info

- conditionally compiled features

- add build-time behaviors (e.g. code generation)

"What's this about publishing to crates.io?"

crates.io

Open-source crate distribution site

Has every version of every crate

Cargo adheres to semantic versioning ("semver")

Semver

The use of Semantic Versioning in cargo basically amounts to this:

Major versions (MAJOR.minor.patch) are free to break whatever they want.

New public API's can be added with minor versions updates (major.MINOR.patch), as long as they do not impose breaking changes.

In Rust, breaking changes includes data-structure representation changes.

Adding fields to structs (or variants to enums) can cause their memory representation to change.

Why major versions can include breaking changes

Cargo invokes the Rust compiler in a way that salts the symbols exported by a compiled library.

This ends up allowing two distinct (major) versions of a library to be used simultaneously in the same program.

This is important when pulling in third party libraries.

In Practice

If you (*) follow the sem.ver. rules, then you do not usually have to think hard about the crates you (recursively) import.

"you" is really "you and all the crates you use"

- You may not believe me, but

cargois really simple to use - Coming from a C/C++ world, this feels like magic

- (probably feels like old hat for people used to package dependency managers)

Final Words

So much more!

Touched on things like Send and Sync, but there is more to Rust's secret sauce

- Lifetimes

- Object-orientation via trait-references

- Unboxed closures

Lots of technology (and still evolving!)

Customers

See https://www.rust-lang.org/en-US/friends.html

Mozilla (of course)

Dropbox (see "Wired" article on exodus from Amazon cloud)

MaidSafe

Pivot from C/C++ to Rust

MaidSafe is one example of this

Rust as enabler of individuals

From "mere script programmer"

to "lauded systems hacker"

Programming in Rust has made me look at C++ code in a whole new light

Thanks

www.rust-lang.org |

Hack Without Fear |